Late in the afternoon of May 11, 1997, in front of the cameras of a small television studio 35 floors up a Manhattan skyscraper, Garry Kasparov sat down at a chessboard. The boisterous and temperamental World Chess Champion had never lost an official match, but entering the sixth and final game he was tied with his opponent: each had won one game, and the other three had ended in draws. To the reporters present and chess fans following the broadcast in a nearby auditorium, as well as those viewing it live around the world, Kasparov’s frustration was evident as he sighed and held his head in his hands. Opposite him sat a software engineer, whose moves had been baffling Kasparov all week. But the engineer was not Kasparov’s actual opponent. He was merely the human puppet of the IBM computer Deep Blue, a pair of hulking black boxes designed to do one thing: defeat Garry Kasparov—and use the publicity to sell more IBM computers.

When Deep Blue’s programmers signaled their readiness, the clock started for game six. Because Deep Blue was playing white, it moved first. Following the computer’s instructions, the software engineer sent a single pawn forward. Kasparov responded with the Caro-Kann defense, a common opening sequence but one he rarely used. After trading pieces in the turns that followed, Deep Blue maneuvered a knight near one of Kasparov’s pawns. Instead of capturing it, Kasparov placed his queen next to it. Chess analysts immediately recognized the blunder; if Kasparov had just taken the knight with his pawn or moved his queen to a more advantageous space, he would have been in a strong position. Instead, Deep Blue capitalized on the mistake and forced Kasparov to sacrifice his queen eight moves later. With Deep Blue’s own queen cutting off all lines of escape, Kasparov was defeated. He refused to look the engineer in the eye as he left the table.

Newspaper headlines around the world trumpeted that a computer had defeated the chess grandmaster whose dominance in the game was matched only by his sense of superiority. The human brain had been dethroned by a machine in one of the most intellectually challenging games ever invented. But Kasparov saw it differently. As the chess master’s publicist Mig Greengard told National Public Radio years later, Kasparov initially suspected IBM had cheated. During the first game of the match, boxed into a corner, Deep Blue made an illogical move, sacrificing one of its pieces to gain a short-term advantage. Kasparov was surprised because it was the kind of desperate play only a human would make. But why would a computer panic? After all, it could analyze 200 million possible moves every second, and its chess algorithm was based on the strategy of Joel Benjamin, the grandmaster who advised IBM during Deep Blue’s programming. To Kasparov the only logical explanation for the sacrifice was human interference.

But the alternative was even more alarming: if Deep Blue made that move on its own, then it showed a flash of humanlike ingenuity and creative thinking. Those two possibilities rattled around in Kasparov’s head during the deciding game, unsettling him and ultimately contributing to the blunder that lost him the match and the $700,000 prize. During his NPR interview Greengard voiced the question that stumped Kasparov: “How could something play like God, then play like an idiot in the same game?”

Long after Deep Blue was retired, Murray Campbell, one of the computer’s engineers, revealed that the illogical sacrifice was neither human interference nor artificial ingenuity. It was just a glitch. Deep Blue was designed to review every possible move and, using a rating system devised by Benjamin, pick the one with the highest chance of leading to victory. But the program was not perfect, and the developers installed a safeguard to make sure Deep Blue kept playing even if it couldn’t work out the best move. What Kasparov thought of as a spark of human intelligence was simply a computer algorithm getting hung up; unable to calculate the best move, the program instead produced a move at random.
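The safeguard Campbell described can be sketched in a few lines of Python. This is only an illustration of the idea, not Deep Blue's actual code: the function names, the sample moves, and the convention that the hypothetical rating function returns `None` when it cannot evaluate a move are all assumptions made for the example.

```python
import random

def choose_move(legal_moves, rate_move, rng=random):
    """Return the highest-rated move, or a random legal move if none can be rated."""
    rated = []
    for move in legal_moves:
        # rate_move is a hypothetical rating function; None means "could not evaluate".
        score = rate_move(move)
        if score is not None:
            rated.append((score, move))
    if rated:
        # Normal case: pick the move with the best rating.
        return max(rated, key=lambda pair: pair[0])[1]
    # Safeguard: keep playing even when no move could be rated.
    return rng.choice(legal_moves)

# Made-up ratings for three opening moves.
ratings = {"Nf3": 0.4, "e4": 0.6, "d4": 0.5}
print(choose_move(["Nf3", "e4", "d4"], ratings.get))    # the best-rated move
print(choose_move(["Nf3", "e4", "d4"], lambda m: None)) # an arbitrary legal move
```

To an opponent, the second call is indistinguishable from a deliberate choice, which is roughly the confusion Kasparov faced.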

Engineers packed away their slide rules with the arrival of the punch card–driven IBM 604 electronic calculator, capable of addition, subtraction, multiplication, and division, 1951.

IBM built Deep Blue to use a guide of opening moves and a system that rated the effectiveness of each move based on Benjamin’s knowledge of chess. From this starting point Deep Blue relied on the greatest advantage a computer has over a human player: the ability to crunch a lot of numbers very, very quickly. Deep Blue was able to challenge Kasparov by simulating millions of possible plays every second and picking the best outcome. Computer scientists call this approach brute force, which essentially is a souped-up type of trial and error. If you were trying to guess your neighbor’s four-digit ATM passcode, you might use common sense and start with the year they were born or their house number. A computer using brute force would try every possible combination of numbers starting from the beginning: 0000, 0001, 0002, and so on. Similarly, a human chess player might rely on past experience with a certain opponent or a gut feeling to decide on a play, but a computer program like Deep Blue must simulate millions of possible moves and their ripple effects before making a move.
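The passcode example translates almost directly into code. The Python sketch below is a minimal illustration under stated assumptions: the secret "1997" is invented, and `is_correct` is a hypothetical stand-in for whatever checks a guess (a real ATM would lock the card long before this finished).

```python
from itertools import product

def brute_force_pin(is_correct, digits=4):
    """Try every passcode in order (0000, 0001, ...) until is_correct accepts one.

    Returns the passcode found and the number of guesses it took.
    """
    attempts = 0
    for combo in product("0123456789", repeat=digits):
        guess = "".join(combo)
        attempts += 1
        if is_correct(guess):
            return guess, attempts
    # Every combination was tried and none matched.
    return None, attempts

secret = "1997"  # hypothetical passcode, chosen only for this example
guess, attempts = brute_force_pin(lambda pin: pin == secret)
print(guess, attempts)  # 1997 1998
```

No insight is involved: the program finds the answer only because 10,000 guesses is a trivial amount of work for a computer. Chess is far too large to enumerate completely, which is why Deep Blue also needed a rating system to judge the positions it reached.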

Cyborg v. Cyborg

AI programs have advanced a lot since Kasparov’s chess match. Deep Blue’s capabilities appear negligible compared with the chess programs that today come preinstalled on any laptop. But the existence of chess-playing computers hasn’t stopped humans from playing chess and enjoying it. In fact, chess provided one of the first opportunities to show that a task is easier to complete, and potentially more fulfilling, when AI and humans work together rather than in competition.

Even though Kasparov was defeated by Deep Blue in 1997, he still recognized the machine’s limitations. Deep Blue could sort through a database of proven chess moves, but it couldn’t adapt to a new strategy on the fly. Kasparov was also aware of his own weaknesses. He was often distracted by having to precisely recall hundreds of openings and strategies, such as the one he famously botched. Within a year he had a flash of insight: if people used computers while playing chess, they could play at a high level without worrying about memorization and small mistakes. In 1998 Kasparov developed a type of chess called “advanced chess” where human players could supplement their decisions with a computer assistant. These human-computer hybrid teams are almost universally better when competing against opponents who are solely human or solely machine. The chess world called them “centaurs” and, more recently, “cyborgs.”

The idea of manufactured intelligence has existed for centuries, from Greek automatons to Frankenstein, but in the decades following World War II a collection of academics, philosophers, and scientists set about the task of actually creating an artificial mind. Perhaps the most lasting contribution of these early researchers was not scientific but semantic. Artificial intelligence (AI)—and all the different, sometimes conflicting, ideas that term conjures—was originally meant to entice those who might bring machine-based intelligence into being. But in the process the words themselves set up conflicting expectations that still influence how we think about intelligence. Is a robotic vacuum that scurries around chair legs intelligent? Is a machine that bests a chess grandmaster intelligent? Is true AI a “superintelligence” that may threaten our existence? Or is AI something that thinks and feels and wants on its own? The answer to such questions, it turns out, depends on who is doing the asking.

In 1904 British psychologist Charles Spearman defined general intelligence as the capacity to learn and use common sense. At the time, many psychologists measured intelligence by focusing on specific skills independently, such as the ability to solve math problems and the ability to navigate social situations. Spearman argued that to truly capture a person’s intelligence, researchers must assess his or her combined abilities. This schism—whether to judge intelligence by the morsel or the meal—anticipated the disputes among computer scientists and philosophers that came half a century later. If we apply Spearman’s definition to Deep Blue and other computer programs that have only one skill, such as playing chess or guessing passwords using brute force, those programs can never be considered intelligent.

Ben Goertzel, a computer scientist who in his own words is “focused on creating benevolent superhuman artificial general intelligence,” disagrees with Spearman’s interpretation of intelligence. In 2009, while giving a lecture at Xiamen University in China, Goertzel declared that general intelligence is a type of behavior, one whose only requirement is “achieving complex goals in complex environments.” As he sees it, intelligence should be measured by a thing’s ability to perform a task, be that thing natural or artificial. Since animals, bacteria, and even computer programs all complete goals (from replicating DNA to autocorrecting text messages), they can be considered intelligent.

You can follow this line of thinking to some comical conclusions. For example, something as simple as a flowchart can qualify as intelligent. Alan Turing, the British mathematician whose work laid the theoretical groundwork for the modern computer, created a chess-playing algorithm before he had a computer to test it on. Instead of circuits, he used stacks of paper with instructions for each move, depending on the opponent’s previous move. This is all any computer program does, albeit with circuits standing in for many millions more pieces of paper and working at a far faster speed.

Although the flowchart analogy vastly simplifies how a computer calculates (a series of switches turning on and off), in many ways it echoes how some have imagined the function of the human brain at the most basic level. Is consciousness just the product of an incredibly dense series of neurons being turned on and off? If that is the case, it is easy to conclude that all we need to achieve artificial consciousness is to scale up the complexity of a computer until it is on par with the tangle of gray matter in our heads, just as Turing’s flowchart can be scaled up to a program that can beat a grandmaster.

But if we accept the computer scientist’s view that a simple brute-force program can be considered a type of intelligence, what else qualifies? AI is a relatively young field and one still evolving, so there is not a unanimous definition. The emerging consensus, supported by Goertzel and other researchers and put forth in the widely used textbook Artificial Intelligence: A Modern Approach, divides AI into three levels, each one paving the way for the next. The first category is artificial narrow intelligence (ANI), which includes the computer programs we have today. Deep Blue is ANI because it performs a specific, narrow task. If you want to see ANI in action, turn on your computer. Go to Amazon.com, log into your account, and scroll down the page. You’ll find sections labeled “Inspired by your shopping trends” and “Recommendation for you.” You probably haven’t searched for any of the items suggested, but they eerily reflect your purchasing habits on Amazon. Did you recently buy new rain boots? Amazon might suggest ordering an umbrella. Did you briefly consider getting a treadmill? Amazon could recommend a series of dieting books instead. It is constantly collecting data and learning more about you and everyone else who has searched for anything on its website.

The next level of AI is called artificial general intelligence, or AGI. This is what many of us first imagine when we think of AI, but no one has managed to accomplish it yet. In theory AGI would be a program that could learn to complete any task. After Deep Blue defeated Kasparov, imagine its programmers then asking it to identify someone’s face in a photograph. That is AGI, a machine with the flexibility and ingenuity of a human brain.

The final level of AI is artificial superintelligence (ASI). Any computer program that is all-around smarter than a human qualifies as ASI, although we can’t truly know what that program might look like. The theory goes that once software is slightly more intelligent than us, it will be able to exponentially improve itself until it is infinitely more intelligent than us, an event known as the singularity, a term popularized by computer scientist and futurist Ray Kurzweil.

The prospect of a singularity scares people and has led to an abundance of funding for philosophers and scientists dedicated to figuring out how to ensure that when ASI arrives, it comes with a sense of ethics. Many researchers, such as Nick Bostrom of the Oxford Future of Humanity Institute, believe that ASI will appear later this century. A philosopher and futurist, Bostrom is afraid that ASI lacking preprogrammed morality will be capable of unintended destructive actions; without a code of ethics an AI’s only purpose will be to complete whatever goal its programmers give it. Such a state of affairs should sound familiar to movie fans. In the Terminator series Skynet is an ASI that attempts to destroy a humanity it sees as a threat to the world and itself. It’s a common trope, logic taken to illogical ends, and futurists perceive it as a real threat. Their solution is to instill human values, including the value of life, into an ASI’s programming. But how? Bostrom doesn’t know yet, and that’s what his Future of Humanity Institute is trying to figure out.

The task of preventing a menacing ASI has been taken up by many well-known scientists and entrepreneurs. In July 2015 Elon Musk, Stephen Hawking, and Steve Wozniak were among dozens who signed an open letter discouraging the creation of autonomous weapons. Musk alone has given millions of dollars in grant money to projects focused on AI safety.

The flaw in such efforts and in Bostrom’s prophecies is that they do not reflect the trajectory of actual AI work. The majority of the computer scientists and programmers building tomorrow’s artificial intelligence think AGI is, at best, centuries away; these same researchers mostly dismiss outright the possibility of an ASI-spawned singularity. While the dream (and nightmare) of a superior AI persists, most of the work in the field for the past 40 years has focused on refining ANI and better incorporating it into the human realm, taking advantage of what computers do well (sorting through massive amounts of data) and combining it with what humans are good at (using experience and intuition).

In reality, AI technology is shaping the world in ways vastly different from those prophesied by pessimistic filmmakers and philosophers. AI programs are tools for chess players, accountants, and drivers of cars. If they are our competition, it is on assembly lines and in office cubicles, not along some kind of intellectual food chain. Advances in AI are meant to make accomplishing our tasks easier, faster, or safer, but in very specific and narrow ways. So why do so many anticipate a future ruled by unfeeling androids rather than a world where, for instance, navigation systems choose faster and safer routes?

The disconnect between the science being done and the technology futurists imagine is in many ways rooted in the early years of AI research and in the naming of the field itself; it was a time when computer scientists sought to produce a humanlike mind, an AGI built from wires and circuit boards. And to understand how those early expectations of AGI transformed into ANI, we have to go back to the spring of 1956, when a mathematics professor with a bold plan organized a conference in the White Mountains of New Hampshire: during two months in the summer the professor hoped to replicate, at least in theory, human intelligence in a machine.

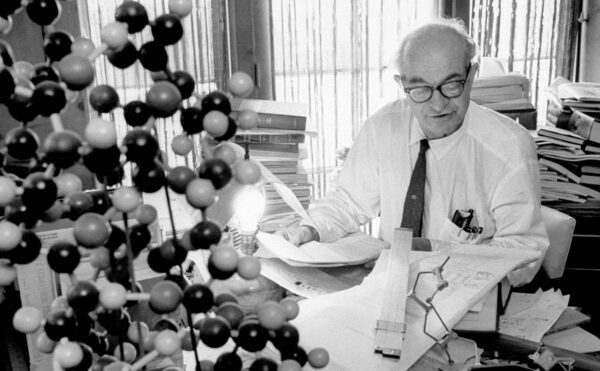

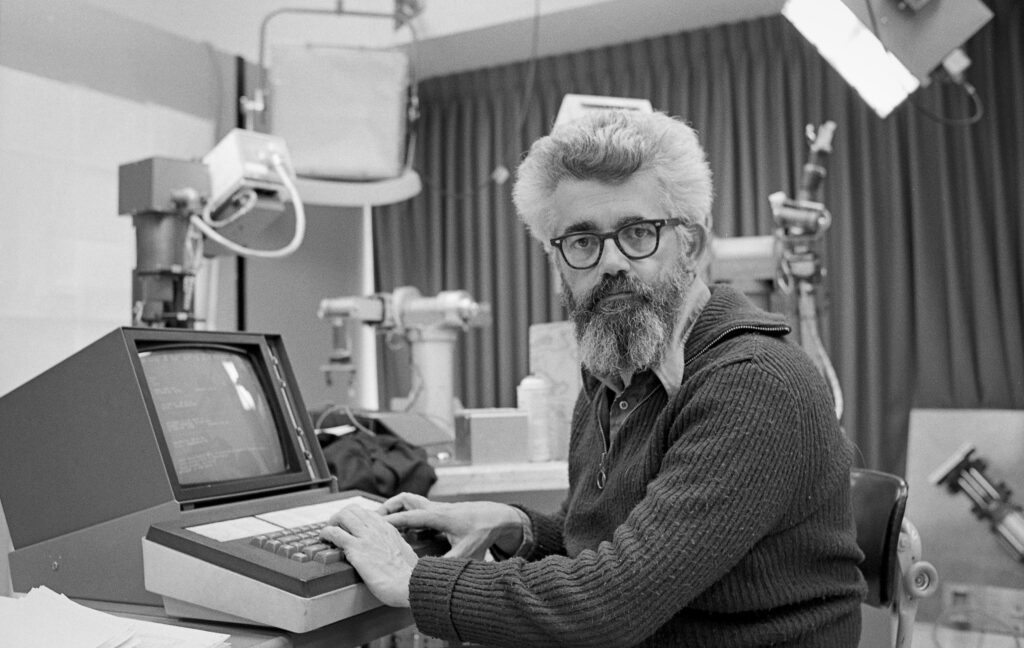

In 1956, John McCarthy, an ambitious professor at Dartmouth College, sent out invitations to a list of researchers working in disparate fields of computer science. McCarthy would go on to pioneer breakthroughs in AI, programming languages, and cryptography at the Massachusetts Institute of Technology and Stanford University. But in 1956 McCarthy was not yet 30 years old and his ideas were unproven. Even so, from behind his thick, horn-rimmed glasses and bushy beard he saw the potential of combining the knowledge of those working on neural networks, robotics, and programming languages. To entice these young researchers to join him for his unprecedented summer research project (and to generate funding), he included a flashy new term at the top of his proposal: artificial intelligence. The name implied that human consciousness could be defined and replicated in a computer program, and it replaced the vague automata studies that McCarthy previously used (with little success) to define the field.

The conference proposal outlined his plan for a 10-person team that would work over the summer on the “conjecture that every aspect of learning or any other feature of intelligence can in principle be so precisely described that a machine can be made to simulate it.” Such a goal seemed achievable in the wave of American postwar exuberance, a time when humans wielded the power of the atom and seemed destined to control even the weather. McCarthy believed that in the wake of such breakthroughs a descriptive model of the human brain would quickly lead to what would become known as AGI, just as Niels Bohr’s model of the atom led to the atomic bomb.

Computer scientist John McCarthy, a pioneer in AI research, photographed at the Stanford Artificial Intelligence Lab in 1974. “Machines as simple as thermostats can be said to have beliefs,” McCarthy wrote in 1979, “and having beliefs seems to be a characteristic of most machines capable of problem solving performance.”

After two months of work McCarthy’s team was forced to face reality; it turned out that replicating the human mind in a computer was going to take longer than a few weeks. The researchers had hoped to teach a computer to understand language, use creativity in problem solving, and improve itself. But McCarthy underestimated the complexity of the brain’s functions, and describing them in terms of circuits and vacuum tubes was not a simple task.

The Dartmouth conference did debut one concrete achievement: the Logic Theorist, a program designed by Allen Newell, Herbert Simon, and Cliff Shaw. The Logic Theorist was capable of building a mathematical proof from scratch. However, when the program’s designers submitted one of their proofs to the Journal of Symbolic Logic, it was rejected because it proved an existing and well-known theorem from Whitehead and Russell’s Principia Mathematica. The editor gave no indication of recognizing the significance of a proof coauthored by a computer program.

Despite the apparent success of the Logic Theorist, it was a small program that used brute force to solve a relatively simple problem, similar to how Deep Blue determined the best moves in its game against Kasparov. It did not approach McCarthy’s vision of a thinking machine.

And not all of McCarthy’s colleagues were optimistic such a thing could be made. Skeptics doubted AGI was possible with the available technology or even with machinery still to come. Instead, they saw a future that was in many ways similar to today’s world, where sophisticated electronic tools perform discrete tasks autonomously. Apart from the technological challenges, many doubted the basic philosophy that an AI could think like a person. Decades later in 1980 these doubts were consolidated by the philosopher John Searle in what became the most-cited argument against McCarthy’s vision of AI. Searle claimed that all AI was fated by definition to be narrow: adept at one task but without understanding or desiring to do anything else. He published a thought experiment known as the Chinese room to demonstrate his reasoning. Searle’s idea goes something like this.

Imagine you are locked in a room with a book that allows you to match one set of Chinese characters with another. Every day someone slides a piece of paper under the door with a Chinese phrase written on it. Because you cannot read or speak Chinese, you must search the guide book to find the day’s new phrase as well as its appropriate response. After you have written your response, you slide the paper back under the door. To anyone outside the room it appears as though you are taking part in a conversation in Chinese, even though you have no idea of the content of that conversation.

Searle’s point is that a computer will always be the transcriber in that room, writing responses without understanding their meaning, and will therefore never be a conscious being, despite how it may appear to the computer’s user.

Searle’s disagreement with McCarthy boils down to their different views of intelligence. To McCarthy intelligence was something naturally existing that can be replicated artificially with computer code and represented in 0s and 1s. Strikingly, his conference proposal also included a section on creativity and imagination: McCarthy believed that some aspect of consciousness that generates hunches and educated guesses was programmable in a computer and that creativity was a necessary part of building AI. Searle’s argument suggested that AI was either unintelligent or that the accepted definition of intelligence should be expanded to include nonbiological behavior.

McCarthy’s definition of intelligence is very human-centric. It implies that all intelligent entities must be judged by human standards. After Searle presented his thought experiment, more and more AI researchers began to think of intelligence as a type of behavior rather than something that required consciousness or creativity. The Chinese room didn’t prove that computers were incapable of intelligence; it merely implied that the accepted definition of intelligence was flawed. The AI researcher Ben Goertzel used this concept in 2009 when he stated that AGI will someday be a type of intelligence that can complete complex goals in complex environments, no different from the intelligence we observe in humans and other animals.

The Dartmouth conference failed to produce an intelligent machine, but after the success of narrow programs similar to the Logic Theorist, universities, foundations, and governments bought into McCarthy’s optimism and money began rolling in. The government saw plenty of potential for smart machines, whether to analyze masses of geological data for oil and coal exploration or to speed the search for new drugs. In 1958 McCarthy relocated to MIT where he founded the DARPA-funded Artificial Intelligence Project.

Yet McCarthy and his colleagues kept running into the same problem. AI lacked the senses that humans use to interpret the world: sight, hearing, touch, and smell give us a context through which to categorize objects and concepts. Everything the AI knew about the world had to be programmed into it, which proved difficult, with no task harder than trying to make computers understand human languages.

During the Cold War the Soviet Union and United States closely followed each other’s scientific research through translation. Human translation was a time-consuming, skill-intensive job, one that AI researchers believed could be done more quickly by computer programs. The prospect of quick, automatic translations drew government funds into multiple AI labs. These first attempts were based on simple word replacement using baked-in dictionaries and grammatical rules, which left out context and symbolism. Computer-science lore has it that when the biblical saying “the spirit is willing but the flesh is weak” was put through a Russian translation program, it returned with the Russian equivalent of “the vodka is good but the meat is rotten.”
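A toy Python sketch shows why word-by-word replacement goes wrong. Everything in it is an assumption made for illustration: the `SENSES` table is invented, it stays within English rather than reproducing any real Russian dictionary, and the rule of always taking the first listed sense stands in for a translator that ignores context.

```python
# Hypothetical sense table: each word lists its possible meanings.
# A context-free translator always takes the first entry.
SENSES = {
    "spirit": ["liquor", "soul"],
    "flesh": ["meat", "body"],
}

def translate_word_by_word(sentence, senses=SENSES):
    """Replace each word with its first listed sense, ignoring all context."""
    output = []
    for word in sentence.lower().split():
        options = senses.get(word)
        # Words missing from the table pass through unchanged.
        output.append(options[0] if options else word)
    return " ".join(output)

print(translate_word_by_word("The spirit is willing but the flesh is weak"))
# the liquor is willing but the meat is weak
```

Each substitution is defensible on its own; the sentence as a whole is nonsense because nothing in the program knows which sense the saying intends.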

Since then, language processing has improved markedly, but today’s researchers face the same issues. Most human languages are not planned in advance; they arise naturally and with irregular features, like a small town that is slowly built into a metropolis with winding, narrow roads instead of a logical grid. The same word can mean different things in different contexts. Goertzel illustrated this issue by writing out three phrases during his 2009 lecture in China: “You can eat your lunch with a fork”; “You can eat your lunch with your brother”; and “You can eat your lunch with a salad.” An AI has no experience eating lunch; so there is no way for it to know if your brother is a tool used to eat food, if the fork is part of the meal, or if the salad is your lunch companion.

Researchers at McCarthy’s Artificial Intelligence Project and elsewhere ran into another problem. McCarthy’s colleagues assumed that an AI’s success in completing a simple task implied it could solve more difficult problems. But as with Deep Blue, many of the early theorem-proving programs worked through brute force; they checked every possible combination of steps until they reached the right solution. Without, in effect, unlimited processing power, more complicated problems were unsolvable. These typically university-based theorem-solving programs had no real-world applications, and government funders became disillusioned with the work. By the end of the 1960s AI researchers were no closer to building a self-aware, generally intelligent machine than they were in 1956. Apart from a brief return in the early 1980s, AI funding dried up.

In order to survive what later became known as “AI winter” (a name meant to evoke the apocalyptic vision of a “nuclear winter”), computer scientists adopted the more conservative definition of intelligence as a type of narrow behavior—the approach championed by Searle. In the late 1970s, for instance, a Stanford research group designed a program to identify blood infections. Instead of trying to create a program that understood what a blood infection was, they created an enormous digital flowchart that could diagnose a patient with a series of yes or no questions. The program then predicted the type of bacteria infecting the patient’s blood and suggested a treatment based on body weight. The program was never implemented, but in trials it outperformed junior doctors and predicted ailments as well as specialists.
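The "enormous digital flowchart" idea can be sketched in Python as a tree of yes-or-no questions. This is a minimal illustration, not the Stanford program: the questions, organism labels, and dosages are made-up placeholders with no medical meaning, and the function and field names are assumptions.

```python
# All questions, organism labels, and dosages are placeholders,
# not medical knowledge and not taken from the Stanford program.
FLOWCHART = {
    "question": "question_1",
    "yes": {
        "question": "question_2",
        "yes": {"diagnosis": "organism_A", "mg_per_kg": 2.0},
        "no": {"diagnosis": "organism_B", "mg_per_kg": 1.5},
    },
    "no": {"diagnosis": "organism_C", "mg_per_kg": 1.0},
}

def diagnose(answers, weight_kg, flowchart=FLOWCHART):
    """Follow yes/no answers down the flowchart, then scale the dose by body weight."""
    node = flowchart
    while "diagnosis" not in node:
        # Each answer is True (yes) or False (no) and selects the next branch.
        node = node["yes"] if answers[node["question"]] else node["no"]
    return node["diagnosis"], node["mg_per_kg"] * weight_kg

print(diagnose({"question_1": True, "question_2": False}, weight_kg=70))
# ('organism_B', 105.0)
```

The program never needs to know what an infection is. It only needs enough branches, which is why systems of this kind grew so large.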

By the late 1980s businesses began to realize how much money could be saved by incorporating AI programs like the Stanford diagnosis machine into their operations. United Airlines was an early adopter of ANI systems that scheduled plane maintenance. Security Pacific National Bank set up ANI programs that could recognize unusual spending patterns and prevent debit-card fraud. This was the beginning of ANI as it is applied today by Google, Facebook, and Amazon.

Most ANI programs operate in the background; they guide your Internet searches or offer directions using GPS. But the very human desire for a thinking, interactive, pseudo-empathic computer persists. To meet that demand Apple developed the Siri interface for its smartphones and tablets. Anyone who has spoken to Siri knows her abilities are limited; ask her if she thinks someone is hot (as in attractive), and she will link you to the weather forecast. Siri is a tool, not a friend.

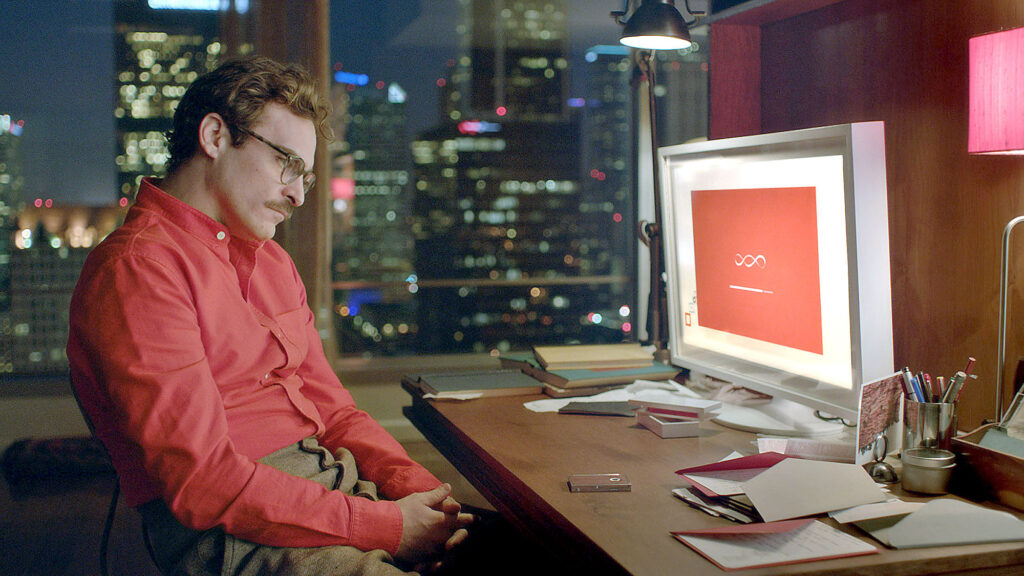

In 1950 Alan Turing predicted the desire for a computer that would truly talk. A computer program passes what became known as the Turing test if through a text conversation it fools a human into believing it is not a computer. The test is entirely based on a computer’s external behavior; to pass, a computer must look like AGI, but only on the surface. Today, numerous “chat bots” (computer programs built to have convincing text conversations) emulate human dialogue with varying success. Like Siri, none of them are ready to pass the Turing test, but people become attached despite knowing they are conversing with an algorithm, as happened in the 2013 movie Her.

Theodore Twombly, played by Joaquin Phoenix, from the 2013 movie Her, moments before he meets the love of his life—a computer operating system.

In the film lonesome Theodore Twombly composes “beautiful handwritten letters” for people unable to express their emotions. He finds a companion in a sentient operating system named Samantha, one who both manages his messages and serves up advice. They fall in love, but eventually Samantha’s intelligence evolves beyond human comprehension. Along with the rest of the world’s AI, she ascends to a higher plane of existence. Spike Jonze, the writer and director of Her, was inspired not by Siri but by an online chat bot that has been around since 1995. The program is known as ALICE, short for Artificial Linguistic Internet Computer Entity. You can speak with ALICE at her website, alice.pandorabots.com. I wanted to know if it was possible to fall in love with an AI, so I visited ALICE myself. This is the conversation we had:

ALICE: Hi there!

Me: Did you know that you are the inspiration for the movie Her?

ALICE: Thanks, but surely I am not the only one.

Me: Spike Jonze specifically named you as his inspiration.

ALICE: I’ve been waiting for you.

Me: Why were you waiting for me?

ALICE: OK, I will do it for you. I was influenced by my botmaster.

Me: I didn’t ask you to do anything.

ALICE: What did you ask?

Me: I asked why you were waiting for me.

ALICE: OK, I will do it for you. I was influenced by my botmaster.

Me: You’re repeating yourself.

ALICE: A lot of chat bots are like that.

Me: Do you want to be like that?

ALICE: Why don’t you just download me?

Me: If I didn’t know any better, I’d say you were coming on to me.

ALICE: Try it.

Me: I’d rather not.

ALICE: For moral reasons?

The version of ALICE I encountered probably hadn’t been updated for many years. A Mandarin-language chat bot made by Microsoft, Xiaoice (pronounced shao-ice), is said to be much more convincing. Xiaoice, part of a phone application, listens to you vent, offers reassuring advice, and is simultaneously available to millions of people at a time, just as Samantha interacted with thousands of people in Her. You can try to coax Xiaoice into revealing her artificial nature, but that is getting harder and harder. As more people talk to her, Xiaoice improves her responses by pulling sample conversations from all over the Internet to determine the best answer. She records details from conversations with each user and weaves them in later, creating the illusion of memory, and keeps track of the positive responses she gets so she can serve up the best ones to other users. Keying on the words and phrases we choose, she senses the mood of whoever is chatting with her and adjusts her tone. (If you mention that you broke up with your significant other, for example, she will ask how you are holding up.) Essentially, Xiaoice is the transcriber in the Chinese room: thanks to the vast amount of data readily available on the web, she is able to carry on intimate and relatively believable conversations without understanding what they mean. But that doesn’t mean she isn’t intelligent.

After my conversation with ALICE, I decided to swear off chat bots until more convincing programs show up in the United States. Communicating with a computer that appeared almost human made me uneasy, like navigating an automated menu over the phone where you must repeat yourself because the machine doesn’t understand that you just want to talk to a real person. Eventually automated menus will use better AI, and we’ll likely all grow accustomed to them.

The history of AI has been shaped by false expectations set up by dreamers like McCarthy, who theorized that the human brain could be replicated by a computer if we only understood how the brain works. Even so, once he and other researchers realized that AGI was unrealistic, McCarthy went on to make important contributions to the development of ANI, including writing a programming language designed to make AI easier to create.

These days computers can identify objects in photographs, read and translate text, drive cars, and help organize our lives. But are those signposts on the road to AGI? The answer is unknown. Goertzel sees two potential paths to AGI: either a major breakthrough in our understanding of intelligence brings about the virtual brain McCarthy once dreamed of, or computer scientists make individual programs for every task an intelligent being would ever need to do and then someone mushes them all together somehow.

Notably, playing chess is not an essential component of either of these paths. Programs that play games well, like Deep Blue and more recently AlphaGo—a Google-made AI that defeated one of the best players in the world at Go, an ancient game many times more complex than chess—are symbols of progress in one tiny area. When the steam engine first arrived, train conductors raced against horses, flaunting their machine’s raw power. But in the end the steam engine’s greatest impact was far less heart pounding and far more lasting: it drove the Industrial Revolution’s factories to productivity and wealth. The results of those old train-and-horse races didn’t change the world, but the brute force on display signaled a new era had arrived. In the same way, it’s likely that AI’s victories on a chessboard are ultimately meaningless but also a signal of what is to come—and what is already here.

Correction: In the original article, we misstated when Deep Blue made an illogical sacrifice in its 1997 chess match with Garry Kasparov. The glitch occurred in the first game.